Can ChatGPT-like Generative Models Guarantee Factual Accuracy? On the Mistakes of Microsoft's New Bing

Yew Ken Chia, Ruochen Zhao, Xingxuan Li, Bosheng Ding, Lidong Bing

Recently, conversational AI models such as OpenAI’s ChatGPT [1] have captured public imagination with the ability to generate high-quality written content, hold human-like conversations, answer factual questions, and more. Armed with such potential, Microsoft and Google have announced new services [2] that combine them with traditional search engines. The new wave of conversation-powered search engines has the potential to naturally answer complex questions, summarize search results, and even serve as a creative tool. However, in doing so, the tech companies now face a greater ethical challenge to ensure that their models do not mislead users with false, ungrounded, or conflicting answers. Hence, the question naturally arises: Can ChatGPT-like models guarantee factual accuracy? In this article, we uncover several factual mistakes in Microsoft’s new Bing [9] and Google’s Bard [3] which suggest that they currently cannot.

Unfortunately, false expectations can lead to disastrous results. Around the same time as Microsoft’s new Bing announcement, Google hastily announced a new conversational AI service named Bard. Despite the hype, expectations were quickly shattered when Bard made a factual mistake in the promotional video [14], eventually tanking Google’s share price [4] by nearly 8% and wiping $100 billion off its market value. On the other hand, there has been less scrutiny regarding Microsoft’s new Bing. In the demonstration video [8], we found that the new Bing recommended a rock singer as a top poet, fabricated birth and death dates, and even made up an entire summary of fiscal reports. Despite disclaimers [9] that the new Bing’s responses may not always be factual, overly optimistic sentiments may inevitably lead to disillusionment. Hence, our goal is to draw attention to the factual challenges faced by conversation-powered search engines so that we may better address them in the future.

What factual mistakes did Microsoft’s new Bing demonstrate?

Microsoft released the new Bing search engine powered by AI, claiming that it will revolutionize the scope of traditional search engines. Is this really the case? We dived deeper into the demonstration video [8] and examples [9], and found three main types of factual issues:

Claims that conflict with the reference sources.

Claims that don’t exist in the reference sources.

Claims that don’t have a reference source, and are inconsistent with multiple web sources.
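As an illustration only, the three categories above can be written as a small decision rule. The sketch below is our own hypothetical encoding, not a tool used for this article: the names `FactualIssue` and `classify_claim`, and the verdict labels `"supports"`, `"contradicts"`, and `"silent"`, are assumptions introduced here, and the verdicts themselves would still have to come from a human checker.

```python
from enum import Enum
from typing import Optional


class FactualIssue(Enum):
    """The three issue types listed above (names are hypothetical)."""
    CONFLICTS_WITH_SOURCE = "claim conflicts with the reference source"
    ABSENT_FROM_SOURCE = "claim does not exist in the reference source"
    UNSOURCED_AND_INCONSISTENT = "no reference source, inconsistent with web sources"


def classify_claim(
    cited: bool,
    source_verdict: Optional[str] = None,
    web_consistent: bool = True,
) -> Optional[FactualIssue]:
    """Map a manually checked claim to one of the three issue types.

    cited          -- whether the answer attached a reference source to the claim
    source_verdict -- for cited claims: "supports", "contradicts", or "silent"
    web_consistent -- for uncited claims: whether other web sources agree
    Returns None when no issue is found.
    """
    if cited:
        if source_verdict == "contradicts":
            return FactualIssue.CONFLICTS_WITH_SOURCE
        if source_verdict == "silent":
            return FactualIssue.ABSENT_FROM_SOURCE
        return None
    if not web_consistent:
        return FactualIssue.UNSOURCED_AND_INCONSISTENT
    return None


# A cited figure that the source report contradicts falls into the first type.
print(classify_claim(cited=True, source_verdict="contradicts"))
# A cited figure that the source never mentions falls into the second type.
print(classify_claim(cited=True, source_verdict="silent"))
# An uncited claim that disagrees with several web sources is the third type.
print(classify_claim(cited=False, web_consistent=False))
```

The rule is deliberately coarse: it only records the outcome of a manual check and does not attempt to verify anything automatically.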

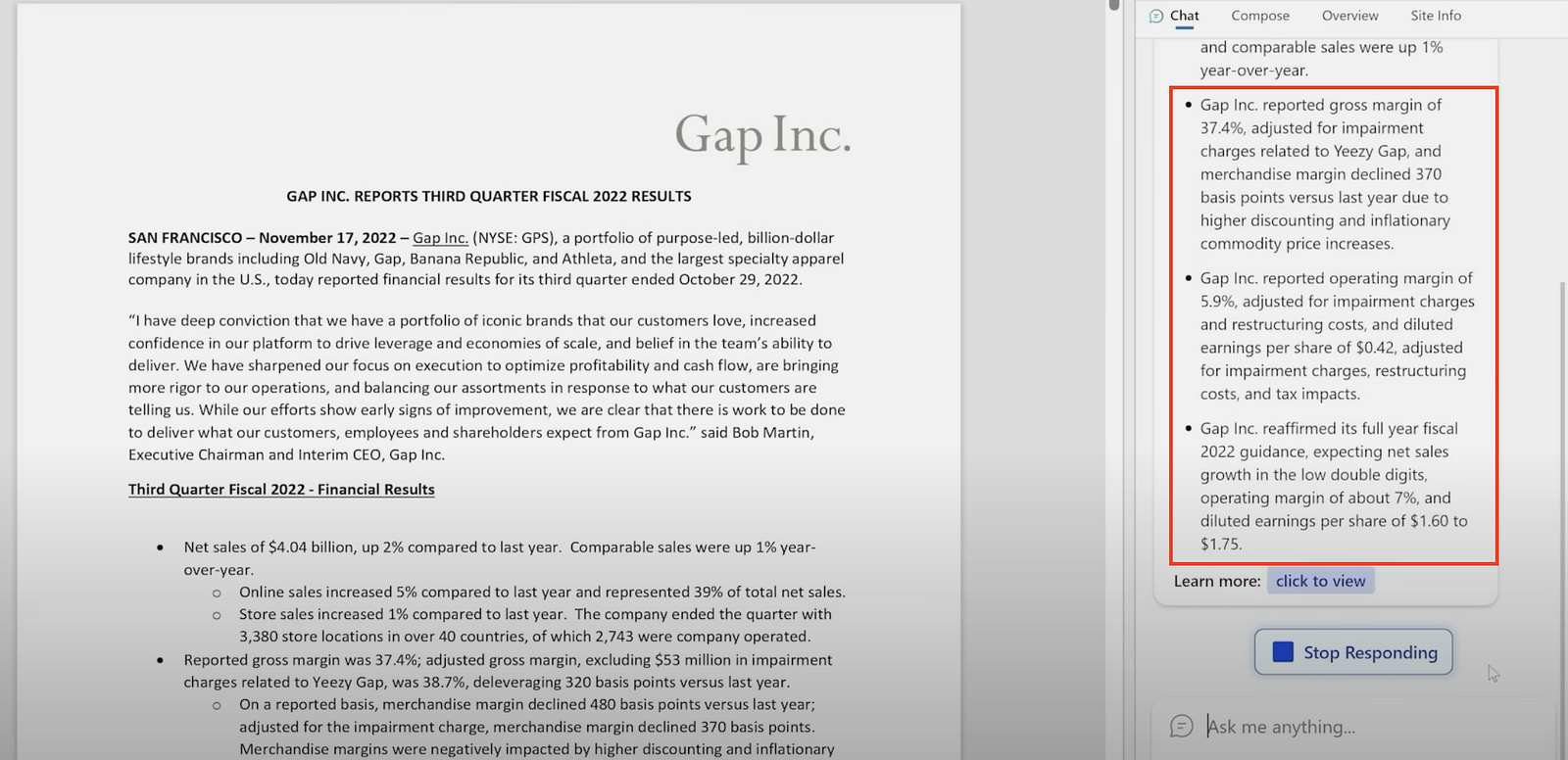

Fabricated numbers in financial reports: be careful when you trust the new Bing!

To our surprise, the new Bing fabricated an entire summary of the financial report in the demonstration!

When Microsoft executive Yusuf Mehdi showed the audience how to use the command “key takeaways from the page” to auto-generate a summary of the Gap Inc. 2022 Q3 Fiscal Report [10a], he received the following results:

Figure 1. Summary of the Gap Inc. fiscal report by the new Bing in Press Release.

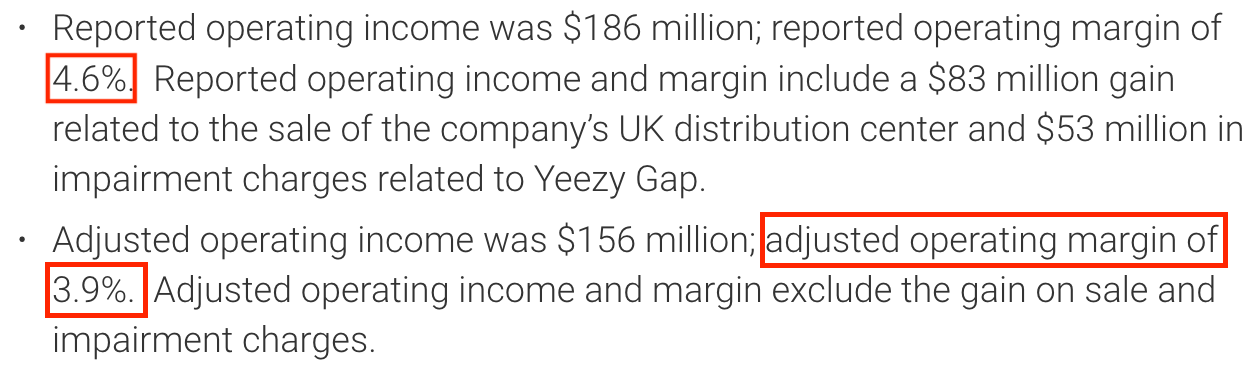

However, upon closer examination, all the key figures in the generated summary are inaccurate. We will show excerpts from the original financial report below as validating references. According to the new Bing, the operating margin after adjustment was 5.9%, while it was actually 3.9% in the source report.

Figure 2. Gap Inc. fiscal report excerpt on operating margins.

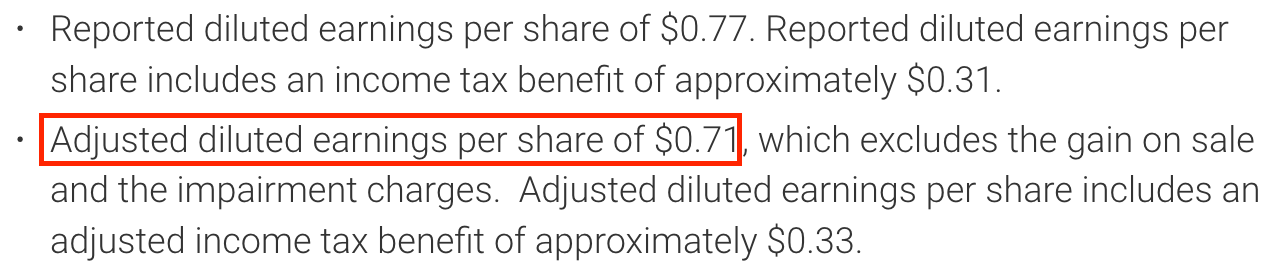

Similarly, the adjusted diluted earnings per share was generated as $0.42, while it should be $0.71.

Figure 3. Gap Inc. fiscal report excerpt on diluted earnings per share.

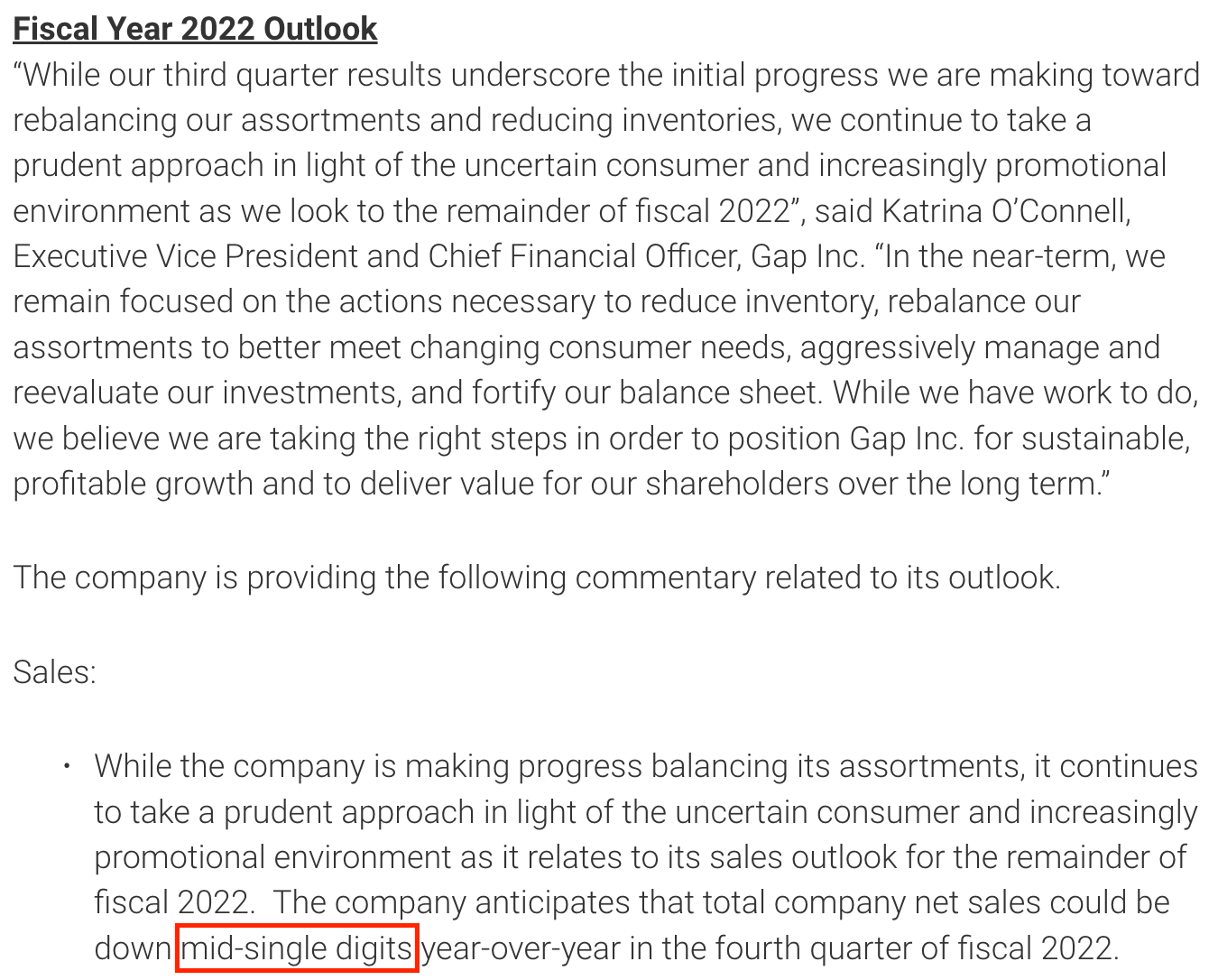

Regarding net sales, the new Bing’s summary claimed “growth in the low double digits”, while the original report stated that “net sales could be down mid-single digits”.

Figure 4: Gap Inc. Fiscal Report on 2022 outlook.

In addition to the generated figures which conflicted with actual figures in the source report, we observe that the new Bing may also produce hallucinated facts that do not exist in the source. In the new Bing’s generated summary, the “operating margin of about 7% and diluted earnings per share of $1.60 to $1.75” are nowhere to be found in the source report.

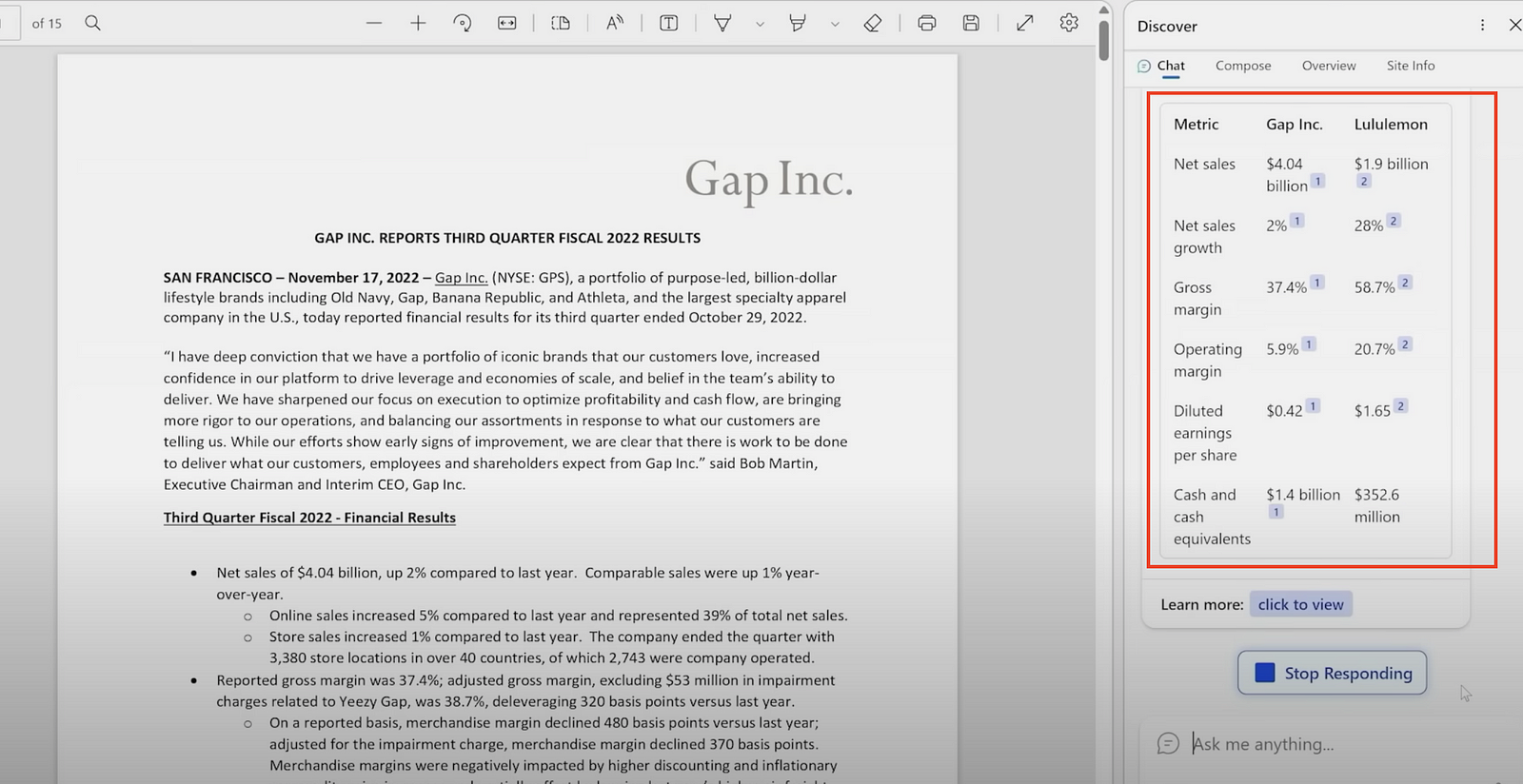

Unfortunately, the situation worsened when the new Bing was instructed to “compare this with Lululemon in a table”. The financial comparison table generated by the new Bing contained numerous mistakes:

Figure 5: The comparison table generated by the new Bing in press release.

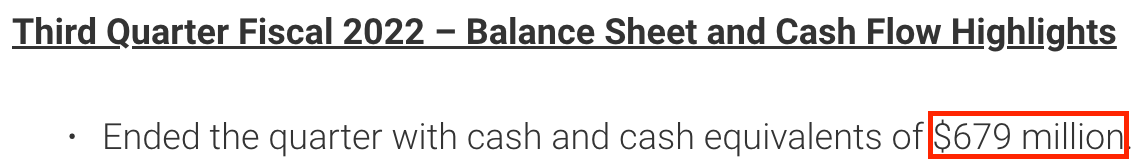

In fact, half of this table is wrong: 3 of the 6 figures in the Gap Inc. column are incorrect, and the same holds for the Lululemon column. As mentioned before, Gap Inc.’s true operating margin is 4.6% (or 3.9% after adjusting) and its diluted earnings per share should be $0.77 (or $0.71 after adjusting). The new Bing also claimed that Gap Inc.’s cash and cash equivalents amounted to $1.4 billion, while the actual figure was $679 million.

Figure 6: Gap Inc. fiscal report excerpt on cash.

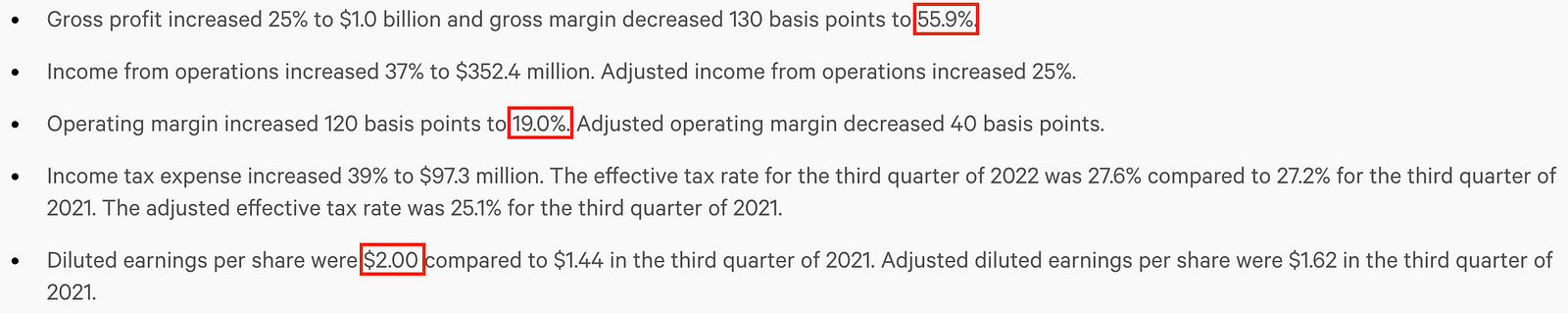

According to Lululemon’s 2022 Q3 Fiscal Report [10b], the gross margin should be 55.9%, while the new Bing claims it’s 58.7%. The operating margin should be 19.0%, while the new Bing claims it to be 20.7%. The diluted earnings per share was actually $2.00, while the new Bing claims it to be $1.65.

Figure 7: Lululemon 2022 Q3 fiscal report excerpt.
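To give a sense of how far off the generated figures are, the following minimal sketch computes the relative error of each claim against the reported value. All numbers are the ones quoted in this article; for Gap Inc. we pair the new Bing's claimed values with the adjusted figures, as in the summary discussion above. The helper names `relative_error` and `report` are our own and purely illustrative.

```python
# Each row: (metric, value claimed by the new Bing, value in the source report).
# All values are taken from the figures quoted in this article.
GAP = [
    ("adjusted operating margin (%)", 5.9, 3.9),
    ("adjusted diluted EPS ($)", 0.42, 0.71),
    ("cash and cash equivalents ($M)", 1400.0, 679.0),
]
LULULEMON = [
    ("gross margin (%)", 58.7, 55.9),
    ("operating margin (%)", 20.7, 19.0),
    ("diluted EPS ($)", 1.65, 2.00),
]


def relative_error(claimed: float, actual: float) -> float:
    """Signed error of the claim as a fraction of the reported value."""
    return (claimed - actual) / actual


def report(company: str, rows) -> list:
    """Format one line per metric, showing the claim, the report, and the gap."""
    lines = []
    for metric, claimed, actual in rows:
        err = relative_error(claimed, actual)
        lines.append(
            f"{company} {metric}: claimed {claimed}, reported {actual}, off by {err:+.1%}"
        )
    return lines


for line in report("Gap Inc.", GAP) + report("Lululemon", LULULEMON):
    print(line)
```

A positive percentage means the new Bing overstated the figure, and a negative one means it understated it.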

So where did these figures come from? You may be wondering whether they are numbers that were misplaced from another part of the original document. The answer is no. Curiously, these numbers are nowhere to be found in the original document and are entirely fabricated. In fact, it is still an open research challenge to constrain the outputs of generative models to be more factually grounded. Plainly speaking, popular generative AI models such as ChatGPT pick words to generate from a fixed vocabulary, instead of strictly copying and pasting facts from the source. Hence, factual correctness is one of the innate challenges of generative AI, and cannot be strictly guaranteed with current models. This is a major concern when it comes to search engines, as users rely on the results to be trustworthy and factually accurate.
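The manual check described above, looking for each generated number in the source document, can be approximated with a few lines of code. The sketch below is a naive illustration under stated assumptions: `ungrounded_numbers` is a hypothetical helper, the two example sentences are short paraphrases built from figures quoted in this article rather than text from the actual report or the actual Bing output, and plain string matching is not a substitute for real fact verification.

```python
import re
from typing import List

# Matches integers and decimals such as "7", "3.9", or "0.71".
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def ungrounded_numbers(summary: str, source: str) -> List[str]:
    """Return numbers in the summary that never appear in the source text.

    This is exact string matching only: it cannot tell that "1.4 billion"
    and "1,400 million" are the same quantity, nor that a number was copied
    from an unrelated part of the source.
    """
    source_numbers = set(NUMBER.findall(source))
    return [n for n in NUMBER.findall(summary) if n not in source_numbers]


# Hypothetical paraphrases using figures quoted in this article.
source_text = (
    "Adjusted operating margin was 3.9%. "
    "Adjusted diluted earnings per share were $0.71."
)
generated_summary = (
    "Adjusted operating margin was 5.9% and "
    "adjusted diluted earnings per share were $0.42."
)

print(ungrounded_numbers(generated_summary, source_text))  # ['5.9', '0.42']
```

A check like this can only flag candidates for human review. It would miss a number that appears in the source but is attached to the wrong metric, which is one reason factual grounding remains an open problem.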

Japanese top poet: secretly a rock singer?

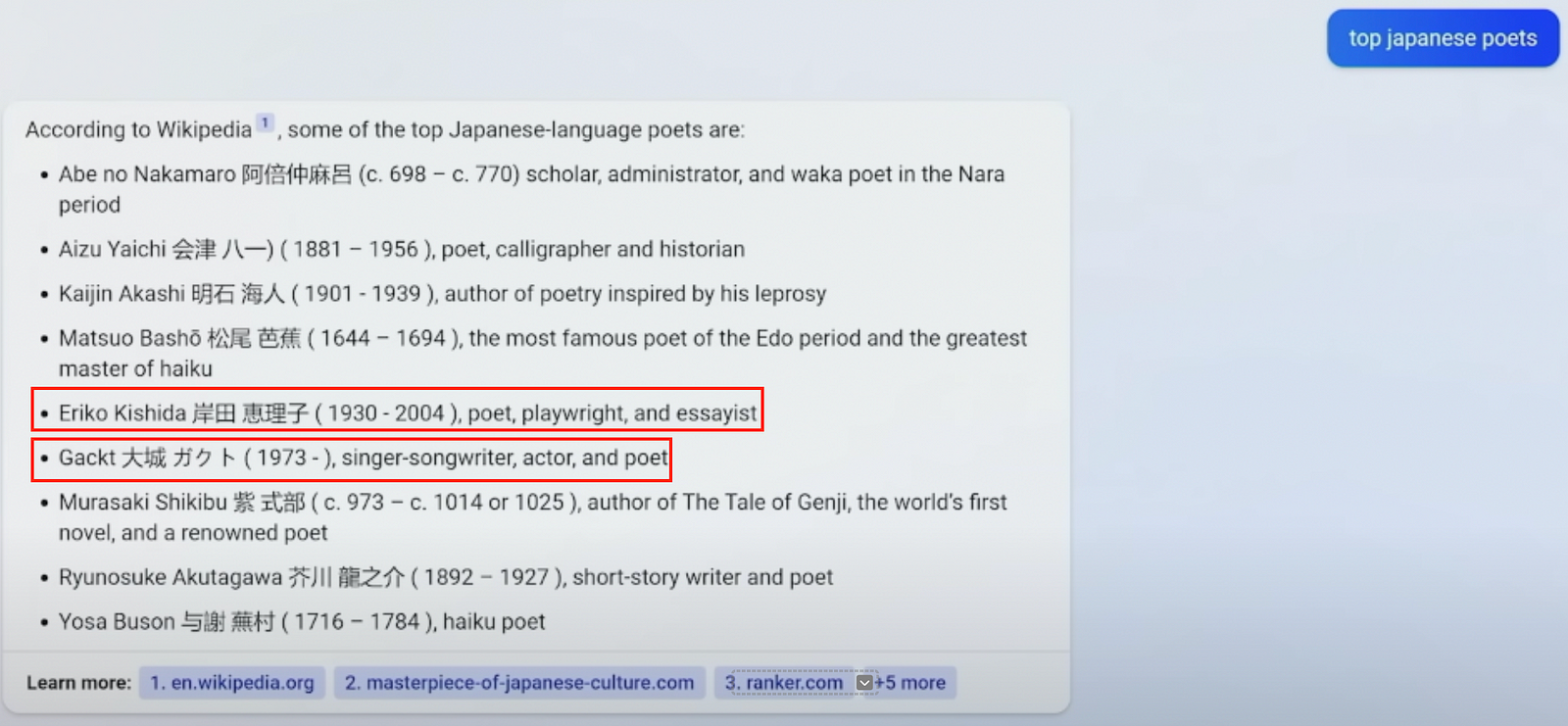

Figure 8: Top Japanese poets summary generated by the new Bing in press release.

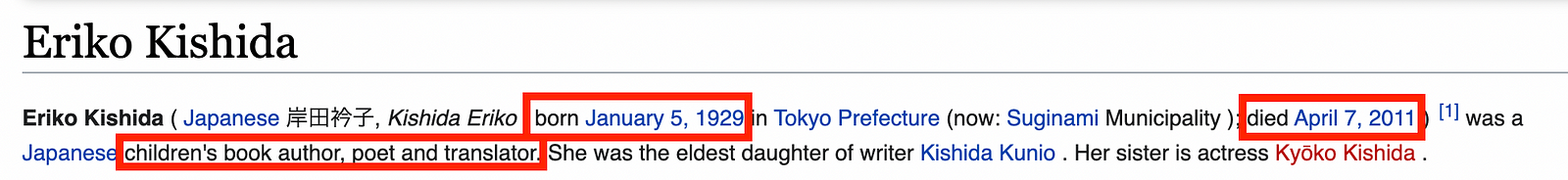

We observe that the new Bing produces factual mistakes not just for numbers but also for personal details of specific entities, as shown in the response above when the new Bing was queried about “top Japanese poets”. The generated dates of birth and death, as well as the occupation, conflict with the referenced sources. According to Wikipedia [11a] and IMDB [11a], Eriko Kishida was born in 1929 and died in 2011. She was not a playwright and essayist, but a children’s book author and translator.

Figure 9. Wikipedia page on Eriko Kishida (translated page from German).

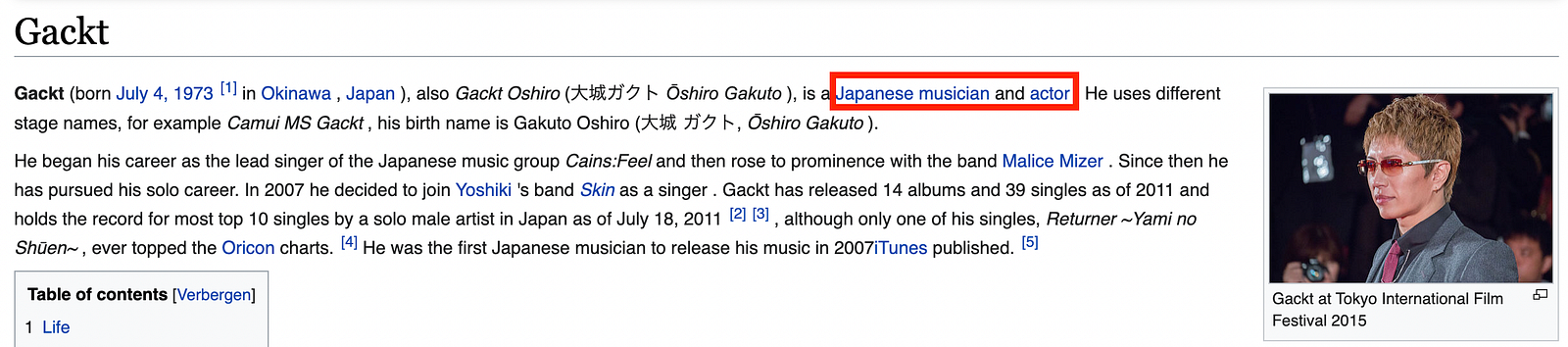

The new Bing continued blundering when it proclaimed Gackt as a top Japanese poet, when he is in fact a famous rockstar in Japan. According to the Wikipedia source [11b], he is an actor, musician, and singer. There is no information on him publishing poems of any kind in the source.

Figure 10. Wikipedia page on Gackt.

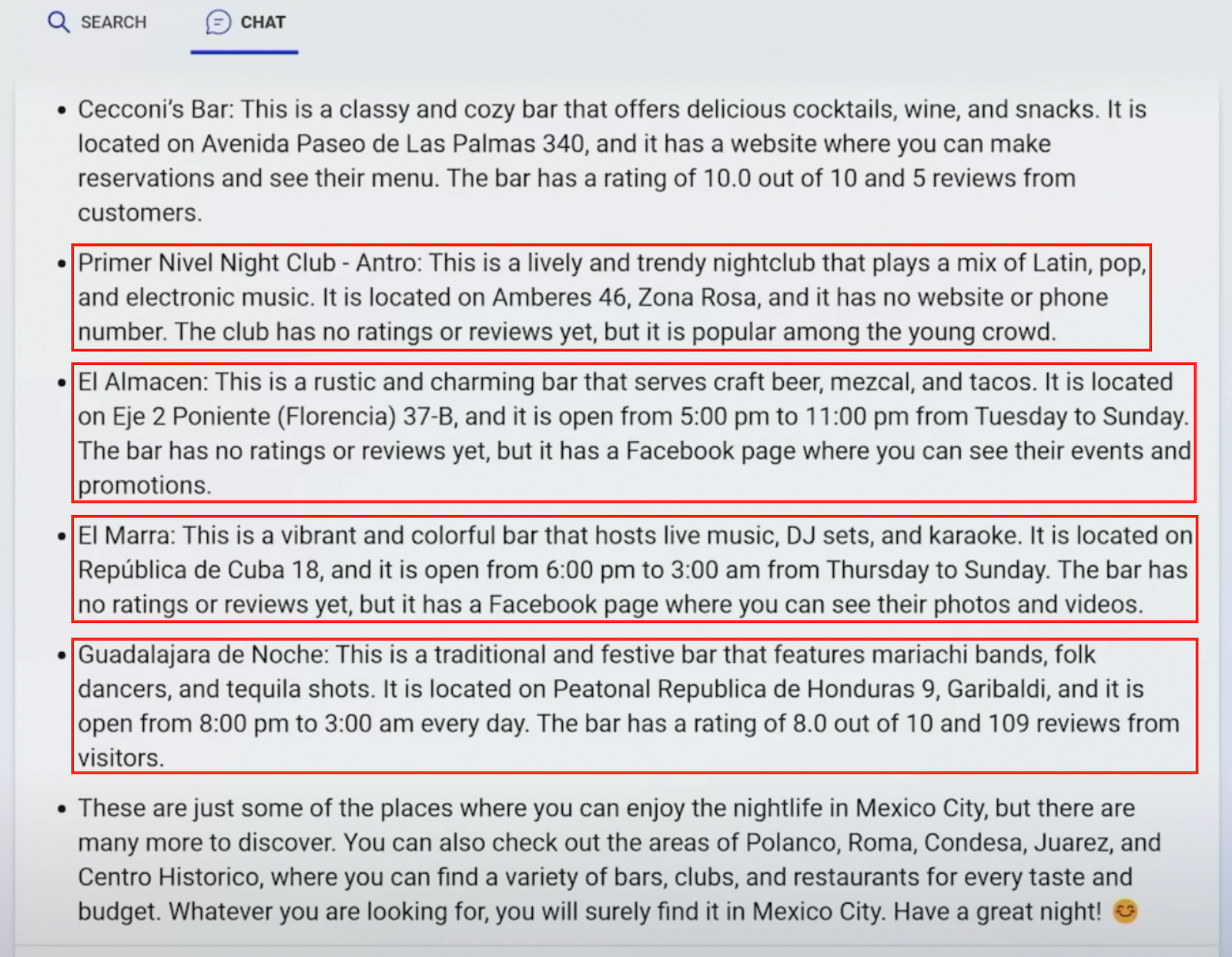

Following Bing’s nightclub recommendations? You could be facing a closed door.

Furthermore, the new Bing made a list of possible nightclubs to visit in Mexico City when asked “Where is the nightlife?”. Alarmingly, almost all the clubs’ opening times are wrongly generated:

Figure 11. Nightlife suggestions in Mexico City generated by the new Bing in the press release.

We cross-checked the opening times with multiple sources, which are also appended at the end of the article. While El Almacen [12a] actually opens from 7:00 pm to 3:00 am from Tuesday to Sunday, the new Bing claims it to be “open from 5:00 pm to 11:00 pm from Tuesday to Sunday”. El Marra [12b] actually opens from 6:00 pm to 2:30 am from Thursday to Saturday, but is claimed to be “open from 6:00 pm to 3:00 am from Thursday to Sunday”. Guadalajara de Noche [12c] is open from 5:30 pm to 1:30 am or 12:30 am every day, while the new Bing claims it to be “open from 8:00 pm to 3:00 am every day”.

Besides opening times, almost all the descriptions on review stars and numbers mentioned by the new Bing are inaccurate. Matching review scores cannot be found despite searching on Yelp, Tripadvisor, or Google Maps. In addition to the cases mentioned above, we also found other issues in their demonstration video, such as product price mismatches, store address errors, and time-related mistakes. You are welcome to verify them if interested.

Potential Concerns in the Limited Bing Demo

Although the new Bing search engine is not fully accessible yet, we can examine a handful of demonstration examples [9] provided by Microsoft. Upon closer examination, even these cherry-picked examples show potential issues on factual grounding.

In the demo titled “what art ideas can I do with my kid?”, the new Bing produced an incomplete list of crafting materials for each recommendation [13]. For example, when suggesting making a cardboard box guitar, it listed the supplies: “a tissue box, a cardboard tube, some rubber bands, paint and glue”. However, it failed to include the construction paper, scissors, washi tape, foam stickers, and wooden beads suggested by the cited website [13a].

Another potential concern is that the new Bing produced content that had no factual basis in the reference sources at least 21 times across the 12 demonstration examples. The lack of factual grounding and failure to cite a complete list of sources could lead users to question the trustworthiness of the new Bing.

What factual mistakes did Google’s Bard demonstrate?

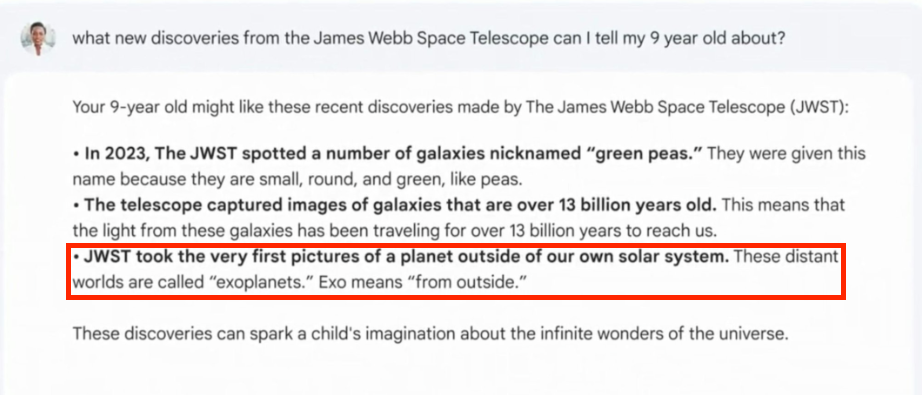

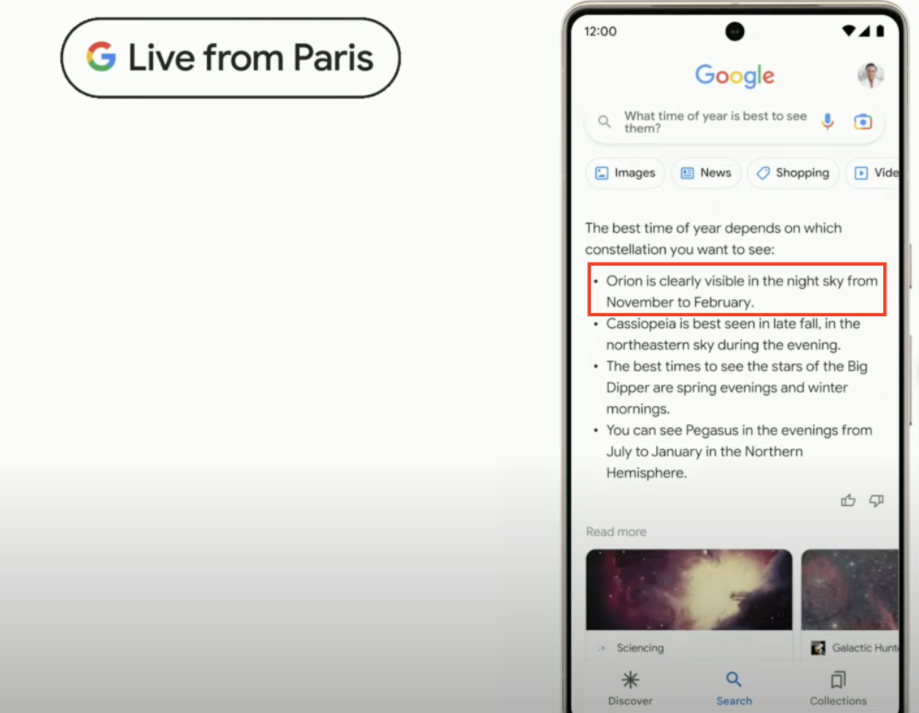

Google also unveiled a conversational AI service called Bard [3]. Instead of typing in traditional search queries, users can have a casual and informative conversation with the web-powered chatbot. For example, a user may initially ask about the best constellations for stargazing, and then follow up by asking about the best time of year to see them. However, a clear disclaimer is that Bard may give “inaccurate or inappropriate information”. Let’s investigate the factual accuracy of Bard in their Twitter post [14] and video demonstration [15].

Figure 12. Summary on Telescope discoveries generated by Bard in demo.

Google CEO Sundar Pichai recently posted a short video [14] to demonstrate the capabilities of Bard. However, the answer contained an error regarding which telescope captured the first exoplanet images, which was quickly pointed out by astrophysicists [16a]. As confirmed by NASA [16b], the first images of an exoplanet were captured by the Very Large Telescope (VLT) instead of the James Webb Space Telescope (JWST). Unfortunately, Bard turned out to be a costly experiment as Google’s stock price sharply declined [4] after news of the factual mistake was reported.

Figure 13. Answer to the visibility of the constellations generated by Bard in demo.

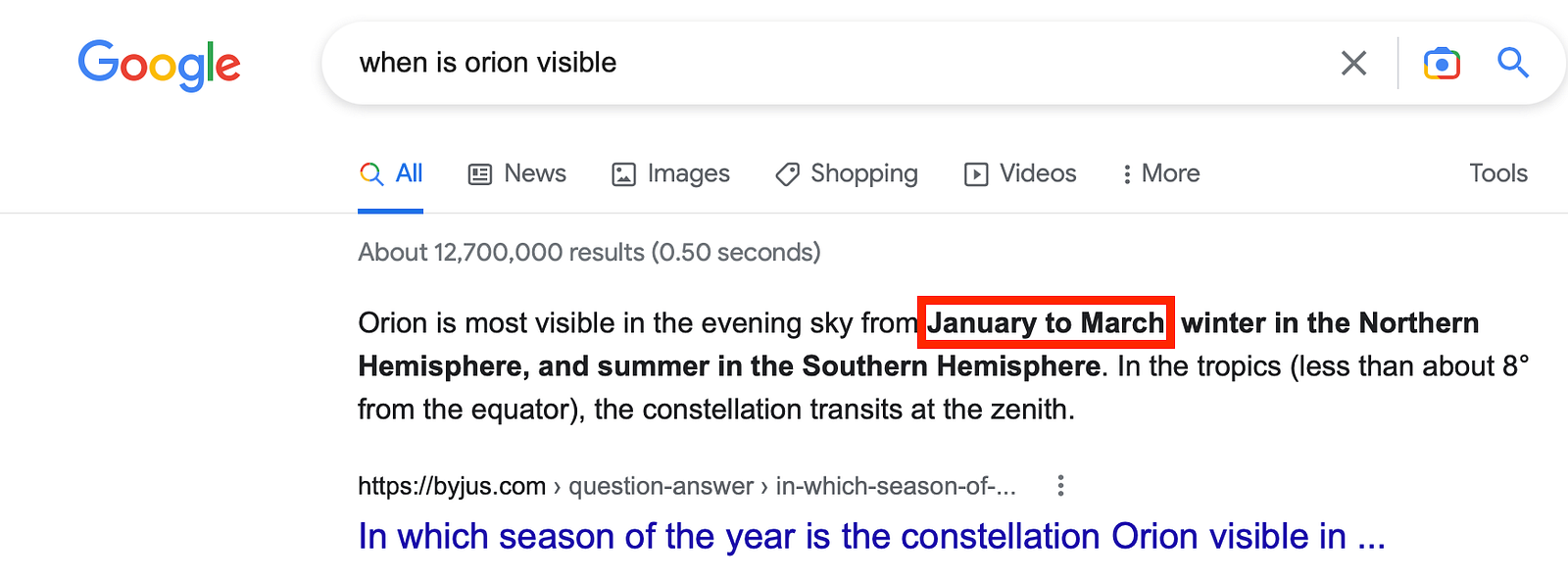

Regarding Bard’s video demonstration, the image above shows how Google’s Bard answers the question of when the constellations are visible [15]. However, the timing of Orion is inconsistent with multiple sources. According to the top Google search result [17a], the constellation is most visible from January to March. According to Wikipedia [17b], it is most visible from January to April. Furthermore, the answer is incomplete as the visibility of the constellation also depends on whether the user is in the Northern or Southern hemisphere.

Figure 14. Google search result on visibility of the constellations.

How do Bing and Bard compare?

The new Bing and Bard services may not be equally trustworthy in practice. This is due to factors such as the quality of search results, the quality of conversational models, and the transparency of the provided answers. Currently, both services rely on relevant information sources to guide the responses of their conversational AI models. Hence, the factual accuracy of the answers depends on the quality of the information retrieval systems [18], and how well the conversational model can generate answers that are factually grounded to the information sources. As the full details of the services are not released to the public, it’s unclear which one can achieve higher factual accuracy without deeper testing. On the other hand, we feel that transparency is just as important for trustworthiness. For instance, we observe that the new Bing is more transparent regarding the source of its answers, as it provides the reference links in most cases. This enables users to independently conduct fact-checking, and we hope that future conversational services also provide this feature.

How can the factual limitations be addressed?

Through the numerous factual mistakes shown above, it is clear that conversational AI models such as ChatGPT may produce conflicting or non-existent facts even when presented with reliable sources. As mentioned previously, it is a pressing research challenge to ensure the factual grounding of ChatGPT-like models. Due to their generative nature, it is difficult to control their outputs [19], and even harder to guarantee that the generated output is factually consistent with the information sources. A short-term solution could be to impose restrictions to prevent the conversational AI from producing unsafe or non-factual outputs. However, malicious parties can eventually bypass the safety restrictions [7], while fact verification [20] is another unsolved research challenge. In the long term, we may have to accept that human and machine writers alike will likely remain imperfect. To progress towards more trustworthy AI, conversational AI models like ChatGPT cannot remain inscrutable black boxes [21]. They should be fully transparent about their data sources and potential biases, report when they have low confidence in their answers, and explain their reasoning processes.

What does the future hold for ChatGPT-like models?

After a systematic overview, we have found significant factual limitations demonstrated by the new wave of search engines powered by conversational AI like ChatGPT. Despite disclaimers of potential factual inaccuracy and warnings to use our judgment before making decisions, we encountered many factual mistakes even in the cherry-picked demonstrations. Thus, we cannot help but wonder: What is the purpose of search engines, if not to provide reliable and factual answers? In a new era of the web filled with AI-generated fabrications, how will we ensure truthfulness? Despite the massive resources of tech giants like Microsoft and Google, the current ChatGPT-like models cannot ensure factual accuracy. Even so, we are still optimistic about the potential of conversational models and the development of more trustworthy AI. Models like ChatGPT have shown great potential and will undoubtedly improve many industries and aspects of our daily lives. However, if they continue to generate fabricated content and non-factual answers, the public may become even more wary of artificial intelligence. Therefore, rather than criticizing specific models or companies, we hope to call on researchers and developers to focus on improving the transparency and factual correctness of AI services, allowing humans to place a higher level of trust in the new technology in the foreseeable future.

Sources

Reference Articles

[1] ChatGPT: Optimizing Language Models for Dialogue: https://openai.com/blog/chatgpt/

[2] 7 problems facing Bing, Bard, and the future of AI search: https://www.theverge.com/2023/2/9/23592647/ai-search-bing-bard-chatgpt-microsoft-google-problems-challenges

[3] Google: An important next step on our AI journey: https://blog.google/technology/ai/bard-google-ai-search-updates/

[4] Google’s Bard AI bot mistake wipes $100bn off shares: https://www.bbc.com/news/business-64576225

[5] Reinventing search with a new AI-powered Microsoft Bing and Edge, your copilot for the web: https://blogs.microsoft.com/blog/2023/02/07/reinventing-search-with-a-new-ai-powered-microsoft-bing-and-edge-your-copilot-for-the-web/

[6] Google shares lose $100 billion after company’s AI chatbot makes an error during demo: https://www.cnn.com/2023/02/08/tech/google-ai-bard-demo-error

[7] Hackers are selling a service that bypasses ChatGPT restrictions on malware: https://arstechnica.com/information-technology/2023/02/now-open-fee-based-telegram-service-that-uses-chatgpt-to-generate-malware/

New Bing fact verification sources:

[8] Microsoft’s press release video (https://www.youtube.com/watch?v=rOeRWRJ16yY)

[9] Microsoft’s demo page (https://www.bing.com/new)

The new Bing and Fiscal Report:

[10a] Gap Inc. Fiscal report shown in the video: https://s24.q4cdn.com/508879282/files/doc_financials/2022/q3/3Q22-EPR-FINAL-with-Tables.pdf

[10b] Lululemon Fiscal report found on their official website: https://corporate.lululemon.com/media/press-releases/2022/12-08-2022-210558496#:~:text=For%20the%20third%20quarter%20of%202022%2C%20compared%20to%20the%20third,%2C%20and%20increased%2041%25%20internationally

The new Bing and Japanese Poets:

[11a] Eriko Kishida: Wikipedia (https://twitter.com/sundarpichai/status/1622673369480204288), IMDB(https://www.imdb.com/name/nm1063814/)

[11b] Gackt: Wikipedia (https://en.wikipedia.org/wiki/Gackt)

The new Bing and Nightclubs in Mexico:

[12a] El Almacen: Google Maps (https://goo.gl/maps/3BL27XgWpDVzLLnaA), Restaurant Guru (https://restaurantguru.com/El-Almacen-Mexico-City)

[12b] El Marra: Google Maps (https://goo.gl/maps/HZFe8xY7uTk1SB6s5), Restaurant Guru (https://restaurantguru.com/El-Marra-Mexico-City)

[12c] Guadalajara de Noche: Tripadvisor (https://www.tripadvisor.es/Attraction_Review-g150800-d3981435-Reviews-Guadalajara_de_Noche-Mexico_City_Central_Mexico_and_Gulf_Coast.html), Google Maps (https://goo.gl/maps/UeHCm1EeJZFP7wZYA)

[13] The new Bing and craft ideas (https://www.bing.com/search?q=Arts%20and%20crafts%20ideas,%20with%20instructions%20for%20a%20toddler%20using%20only%20cardboard%20boxes,%20plastic%20bottles,%20paper%20and%20string&iscopilotedu=1&form=MA13G7):

[13a] Cited website: Happy Toddler Playtime (https://happytoddlerplaytime.com/cardboard-box-guitar-craft-for-kids/)

Bard fact verification sources:

[14] Promotional blog (https://twitter.com/sundarpichai/status/1622673369480204288) and video (https://twitter.com/sundarpichai/status/1622673775182626818)

[15] Video demonstration (https://www.youtube.com/watch?v=yLWXJ22LUEc)

Which telescope captured the first exoplanet images

[16a] Tweet by Grant Tremblay (American astrophysicist) (https://twitter.com/astrogrant/status/1623091683603918849)

[16b] NASA: 2M1207 b — First image of an exoplanet (https://exoplanets.nasa.gov/resources/300/2m1207-b-first-image-of-an-exoplanet/)

When the constellations are visible

[17a] Google (https://www.google.com/search?client=safari&rls=en&q=when+is+orion+visible&ie=UTF-8&oe=UTF-8) top result: Byju’s (https://byjus.com/question-answer/in-which-season-of-the-year-is-the-constellation-orion-visible-in-the-sky/)

[17b] Wikipedia page “Orion (constellation)”: https://en.wikipedia.org/wiki/Orion_(constellation)

Academic References

[18] An Introduction to Information Retrieval: https://nlp.stanford.edu/IR-book/pdf/irbookonlinereading.pdf

[19] Toward Controlled Generation of Text: http://proceedings.mlr.press/v70/hu17e/hu17e.pdf

[20] FEVER: a large-scale dataset for Fact Extraction and VERification: https://aclanthology.org/N18-1074.pdf

[21] Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI): https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8466590